Web scraping has come a long way. And yet, despite all this progress, scraping projects still fail every single day, usually because the scraper gets blocked.

IP blocking remains the first and strongest line of defense for most websites. That’s where smart IP rotation and geo-targeting come in. In this guide, we’ll break down how IP blocking actually works, why basic proxy setups often fail, and how to design scraping systems that look natural, distributed, and resilient.

How Websites Identify and Block Scrapers

Before diving into solutions, let’s understand how websites actually think about traffic.

1. IP Address as an Identity Signal

To websites, an IP address acts like an origin fingerprint, a way to identify where traffic is coming from and how it behaves over time.

When a single IP sends a high volume of repetitive requests, it raises a red flag. Rapid, repeated requests following the same structure look automated. Even if your scraper behaves politely, repetition from a single origin quickly turns into suspicion.

2. Trust Scoring Explained

Most websites assign trust scores to incoming traffic based on where it comes from and how it behaves.

- Clean residential IPs usually sit at the top of the scale. They look like real users browsing from real locations, so they’re given high trust.

- Public Wi-Fi or shared IPs fall somewhere in the middle. Since many users share them, they’re watched more closely but not immediately blocked.

- Datacenter IPs, especially when they come from known hosting subnets, start with low trust.

Once you see blocking this way, it becomes clear why smart IP rotation and geo-targeting work so well. They help your traffic stay on the right side of that trust scale.
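As a rough illustration of the tiers above, here is a minimal sketch of how a starting trust value might map to an outcome. The numeric scores, the thresholds, and the function names are all assumptions made up for this example; real anti-bot systems do not publish their scoring.

```python
# Hypothetical baseline trust per IP type on a 0-1 scale (illustrative values only).
BASELINE_TRUST = {
    "residential": 0.8,  # looks like a real user at a real location
    "shared": 0.5,       # public Wi-Fi or shared IPs: watched more closely
    "datacenter": 0.2,   # known hosting subnets start low
}


def starting_trust(ip_type: str) -> float:
    """Return the assumed baseline trust; unknown types land in the middle."""
    return BASELINE_TRUST.get(ip_type, 0.5)


def action_for(score: float) -> str:
    """Map a trust score to an outcome using made-up thresholds."""
    if score >= 0.7:
        return "allow"
    if score >= 0.4:
        return "throttle"
    if score >= 0.2:
        return "captcha"
    return "block"


for ip_type in ("residential", "shared", "datacenter"):
    print(ip_type, "->", action_for(starting_trust(ip_type)))
```

The point is not the exact numbers but the shape: where your traffic starts on the scale decides how much room it has before restrictions kick in.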

Understanding IP Basics

| Concept | What It Means | Why It Matters |
| --- | --- | --- |
| IPv4 | The most widely used IP format on the web today | Most websites are optimized to trust and handle IPv4 traffic, making it more reliable for scraping |
| IPv6 | Newer IP format with a much larger address space | Often assigned lower default trust scores due to limited real-user traffic and weaker reputation history |
| IP Address | Your scraper’s visible network identity | Repeated requests from the same IP quickly build patterns that can trigger blocks |
| Request Volume | Number of requests sent from one IP | High volume + repetition signals automation and raises suspicion |
| /24 Subnet | A block of 256 closely related IPs | Websites often evaluate reputation at the subnet level, not just per IP |
| Bad Neighbors | Other IPs in your subnet that were abused | One heavily abused IP can damage trust for the entire subnet |
| Trust Scoring | A probabilistic reputation score assigned to traffic | Determines whether requests pass, get slowed, hit CAPTCHA, or get blocked |
| Blocking Behavior | Gradual restrictions, not instant bans | Explains why scraping may work briefly before failing |
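To make the /24 idea concrete, the sketch below groups a proxy pool by /24 subnet using Python's standard `ipaddress` module. The helper names and the sample addresses (taken from the reserved documentation ranges) are illustrative assumptions.

```python
import ipaddress
from collections import defaultdict


def subnet_24(ip: str) -> str:
    """Return the /24 network that contains an IPv4 address."""
    return str(ipaddress.ip_network(f"{ip}/24", strict=False))


def group_by_subnet(ips):
    """Group IPs by their /24 so 'neighbors' are easy to spot."""
    groups = defaultdict(list)
    for ip in ips:
        groups[subnet_24(ip)].append(ip)
    return dict(groups)


# Documentation-range addresses used purely as sample data.
pool = ["203.0.113.5", "203.0.113.77", "198.51.100.9"]
print(group_by_subnet(pool))
# {'203.0.113.0/24': ['203.0.113.5', '203.0.113.77'], '198.51.100.0/24': ['198.51.100.9']}
```

If most of a pool collapses into a handful of subnets, one bad neighbor can drag the rest down with it.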

IP Metadata: The Silent Fingerprint Most Scrapers Ignore

Every IP address carries metadata that websites can look up. Most scraping setups ignore this entirely. And that’s often where things go wrong.

What IP Metadata Reveals

Some of the most important information includes:

- ISP Name: Identifies who owns or provides the connection. Consumer ISPs look normal. Hosting providers do not.

- ASN (Autonomous System Number): Groups IPs by network ownership. ASNs tied to cloud providers or data centers are heavily monitored.

- Country & Region: Indicates geographic origin. Mismatches between IP location and expected user behavior raise suspicion.

- Connection Type: Websites can often infer whether traffic comes from residential broadband, mobile networks, corporate links, or data centers.

Individually, these signals might not block a request. Together, they form a silent fingerprint that strongly influences trust scoring.
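One way to picture that combined fingerprint is as a record with a few checks run against it. The sketch below is an assumption-heavy illustration: the `IPMetadata` fields mirror the list above, while `risk_flags`, the flag names, and the sample ASNs (from the documentation range) are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IPMetadata:
    isp: str
    asn: str
    country: str
    connection_type: str  # e.g. "residential", "mobile", "corporate", "datacenter"


def risk_flags(meta, expected_countries, hosting_asns):
    """Collect the individual signals that, together, lower trust."""
    flags = []
    if meta.asn in hosting_asns:
        flags.append("hosting_asn")
    if meta.connection_type == "datacenter":
        flags.append("datacenter_connection")
    if meta.country not in expected_countries:
        flags.append("geo_mismatch")
    return flags


# Hypothetical sample data; AS64500 is a documentation-range ASN.
cloud_ip = IPMetadata("ExampleCloud", "AS64500", "NL", "datacenter")
home_ip = IPMetadata("ExampleTelecom", "AS64501", "US", "residential")

print(risk_flags(cloud_ip, {"US"}, {"AS64500"}))
# ['hosting_asn', 'datacenter_connection', 'geo_mismatch']
print(risk_flags(home_ip, {"US"}, {"AS64500"}))
# []
```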

Why Datacenter Metadata is a Red Flag

Most datacenter IPs are owned by well-known hosting companies. Their ASNs are public, heavily cataloged, and commonly associated with automated traffic. From a website’s perspective, traffic coming from these networks starts at a disadvantage.

Datacenter traffic often also shows patterned routing and ASN clustering. Requests originate from tightly grouped IP ranges, follow predictable paths, and appear at volumes that don’t match normal user behavior.

This is why datacenter IPs often hit CAPTCHAs or blocks quickly, even when request rates seem reasonable.

Why Residential & Mobile IPs Score Higher

Residential and mobile IPs belong to ISP-linked ASNs that serve millions of users every day. Their traffic patterns are naturally noisy, uneven, and diverse, exactly what websites expect to see.

Mobile networks, in particular, rotate IPs frequently and distribute traffic across large pools. This creates a human-like distribution, where no single IP or subnet dominates request volume for long.

As a result, residential and mobile IPs typically start with higher trust scores and stay trusted longer, making them far more resilient for scraping tasks that need consistency over time.

How IP Tracking Actually Works at Scale

Modern anti-bot systems operate in layers of reputation, not single data points.

IP Reputation Databases

Most large websites rely on continuously updated IP reputation databases. These databases aggregate historical behavior across millions of requests, flagging IPs that show signs of automation, abuse, or abnormal traffic patterns.

Once an IP earns a poor reputation, that history follows it. Even if your scraper behaves politely, starting from a known “bad” IP puts you at an immediate disadvantage.

ASN-Level Scoring

Reputation doesn’t stop at individual IPs. Websites also score traffic at the ASN level, grouping IPs by network ownership.

If an ASN is heavily associated with bots or scraping activity, all IPs under that ASN start with reduced trust. This is why switching between IPs inside the same provider often changes nothing. To the website, it’s still the same network showing up.

Subnet-Level Penalties

Subnets add another layer of filtering. When multiple IPs from a single subnet trigger suspicious behavior, the entire block can be penalized.

This is especially common with /24 subnets. Once a few IPs in that range are flagged, the rest inherit the reputation, whether they were involved or not. Clean IPs become collateral damage.

Relationship Graphs: IP → Subnet → ASN

The most effective systems don’t view these signals separately. They build relationship graphs that connect individual IPs to their subnet, and subnets to their ASN.

So when a request arrives, it’s evaluated in context:

- Has this IP misbehaved?

- Has this network done this before?

Trust is calculated across the entire graph.
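A toy version of that graph can be sketched in a few lines. Everything here is an assumption for illustration: the `ReputationGraph` class, the per-level penalty weights, and the IP-to-ASN lookup table are invented, and a real system would use far richer signals.

```python
import ipaddress
from dataclasses import dataclass, field

# Hypothetical penalty per recorded strike at each level of the graph.
WEIGHTS = {"ip": 0.30, "subnet": 0.10, "asn": 0.02}


@dataclass
class ReputationGraph:
    ip_to_asn: dict                      # assumed lookup table: IP -> ASN
    strikes: dict = field(default_factory=dict)

    def _nodes(self, ip):
        """Return the (level, value) nodes an IP is connected to."""
        subnet = str(ipaddress.ip_network(f"{ip}/24", strict=False))
        asn = self.ip_to_asn.get(ip, "unknown")
        return (("ip", ip), ("subnet", subnet), ("asn", asn))

    def report(self, ip):
        """Record one strike against the IP, its subnet, and its ASN."""
        for node in self._nodes(ip):
            self.strikes[node] = self.strikes.get(node, 0) + 1

    def trust(self, ip):
        """Trust is evaluated across the whole graph, not just the IP."""
        penalty = sum(
            WEIGHTS[level] * self.strikes.get((level, value), 0)
            for level, value in self._nodes(ip)
        )
        return max(0.0, 1.0 - penalty)


graph = ReputationGraph({
    "203.0.113.5": "AS64500",
    "203.0.113.9": "AS64500",
    "198.51.100.7": "AS64501",
})
graph.report("203.0.113.5")
graph.report("203.0.113.5")

for ip in ("203.0.113.5", "203.0.113.9", "198.51.100.7"):
    print(ip, round(graph.trust(ip), 2))
```

In this sketch `203.0.113.9` never misbehaved, yet its trust still drops because it shares a subnet and ASN with the flagged address, which is the collateral damage described above.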

Why Rotating Bad IPs Doesn’t Help

If you rotate through IPs that share the same subnet, ASN, or reputation history, you’re not really rotating at all. You’re just changing the last few digits while keeping the same identity underneath.

That’s why smart IP rotation focuses on where IPs come from, not just how often they change.

The Real Solution

Rotating IPs alone isn’t enough. The real fix is diversity.

Smart IP Rotation

Basic rotation strategies often rely on simple logic: one IP per request, move to the next, repeat.

Smart rotation does more than just cycle addresses.

First, it avoids reusing rejected subnets. If a subnet is already flagged, rotating within it only spreads the damage faster. Clean pools should stay isolated from contaminated ones.

Second, the rotation strategy should match the scraping goal:

- Session-based rotation works best for login flows, carts, or multi-step interactions where consistency matters.

- Per-request rotation is better for large-scale data collection, where no single IP should stand out.
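The two modes can be sketched with one small class. This is a minimal illustration, not any provider's API: `ProxyRotator`, its method names, and the placeholder proxy URLs are assumptions.

```python
import itertools


class ProxyRotator:
    """Round-robin rotation with optional sticky sessions."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)
        self._sessions = {}

    def per_request(self):
        """Per-request rotation: every call moves to the next proxy."""
        return next(self._cycle)

    def for_session(self, session_id):
        """Session-based rotation: the same session keeps the same proxy."""
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._cycle)
        return self._sessions[session_id]

    def end_session(self, session_id):
        """Release the sticky proxy once the multi-step flow is finished."""
        self._sessions.pop(session_id, None)


# Placeholder proxy URLs for illustration only.
rotator = ProxyRotator([
    "http://proxy-a.example:8000",
    "http://proxy-b.example:8000",
    "http://proxy-c.example:8000",
])
print(rotator.per_request())          # http://proxy-a.example:8000
print(rotator.for_session("cart-1"))  # http://proxy-b.example:8000
print(rotator.for_session("cart-1"))  # http://proxy-b.example:8000 (sticky)
```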

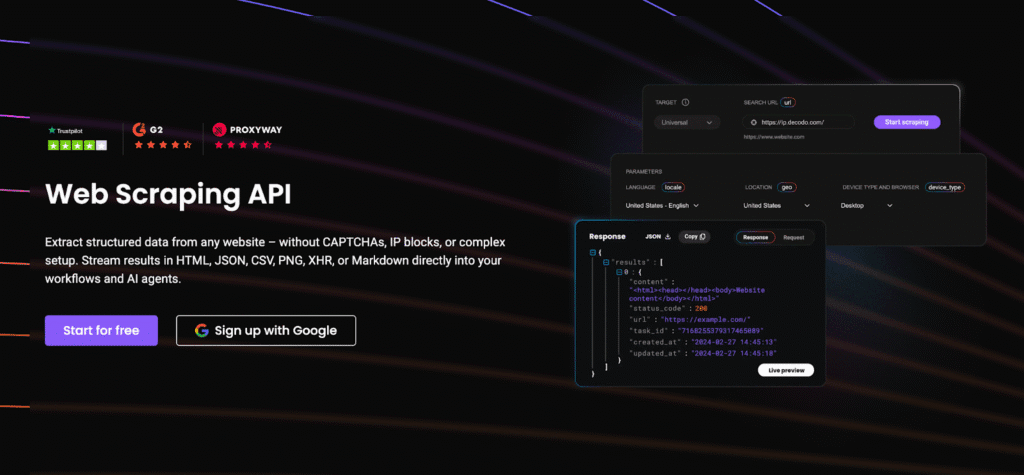

Scraping APIs, such as Decodo, design rotation logic around ASN diversity rather than just raw IP volume, which helps avoid shared-reputation traps at scale.

Geographic Distribution

Websites expect traffic to come from certain regions, and mismatches raise suspicion.

A good rule of thumb is to match IP location to the website’s primary audience or origin. A US-based site expecting US visitors will trust US IPs more than traffic coming from unrelated regions.

This is why US and EU IPs often perform better. They dominate consumer internet traffic, have mature ISP ecosystems, and blend naturally into expected usage patterns.

That said, global distribution isn’t always helpful. It works best when:

- You’re scraping region-specific content

- The site serves a truly international audience

It can hurt when:

- Requests jump across countries unrealistically

- Behavior doesn’t align with local usage patterns

Geo-targeting should feel natural, not random.
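In code, "natural, not random" could look something like the sketch below: pick proxies only from the target's country, and measure how often a request sequence jumps between countries. The pool structure, `pick_proxy`, and `country_hops` are hypothetical names for this example.

```python
import random


def pick_proxy(pool, target_country, rng=random):
    """Choose a proxy located in the target's primary country.

    Raises instead of silently falling back to another region, since a
    mismatched location is exactly what we are trying to avoid.
    """
    local = [p for p in pool if p["country"] == target_country]
    if not local:
        raise LookupError(f"no proxies available in {target_country}")
    return rng.choice(local)


def country_hops(countries):
    """Count how many times consecutive requests switch country."""
    return sum(1 for a, b in zip(countries, countries[1:]) if a != b)


# Placeholder pool for illustration only.
pool = [
    {"url": "http://proxy-us-1.example:8000", "country": "US"},
    {"url": "http://proxy-us-2.example:8000", "country": "US"},
    {"url": "http://proxy-de-1.example:8000", "country": "DE"},
]
print(pick_proxy(pool, "US")["country"])        # US
print(country_hops(["US", "US", "DE", "US"]))   # 2
```

A high hop count within one session is a hint that the rotation looks more random than any real visitor would.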

ASN Diversity

IP count is often treated as the main metric. In reality, ASN diversity matters far more than raw volume.

Consider this example:

- 10,000 IPs from a single ASN still look like one network.

- 1,000 IPs spread across 100 ASNs look like organic, distributed traffic.

Websites score trust at the ASN level because ASNs represent real ownership and routing boundaries. Spreading traffic across many ASNs reduces correlation, lowers pattern density, and keeps trust scores higher for longer.
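The comparison above can be put into numbers. The sketch below uses the inverse Simpson index, a general-purpose diversity measure chosen here for illustration (the article does not prescribe a specific metric), to estimate how many ASNs a pool "effectively" spans. The function name and the sample ASNs from the private-use range are assumptions.

```python
from collections import Counter


def effective_asn_count(asns):
    """Inverse Simpson index: 1 / sum(p_i^2) over each ASN's share of the pool.

    Returns 1.0 when every IP sits in one ASN and N when IPs are spread
    evenly across N ASNs.
    """
    counts = Counter(asns)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return 1.0 / sum((n / total) ** 2 for n in counts.values())


# 10,000 IPs in one ASN versus 1,000 IPs spread evenly over 100 ASNs.
single = ["AS64512"] * 10_000
spread = [f"AS{64512 + i % 100}" for i in range(1_000)]

print(effective_asn_count(single))          # 1.0
print(round(effective_asn_count(spread)))   # 100
```

By this measure the smaller pool is about a hundred times more diverse, even though it has a tenth of the addresses.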

Choosing the Right Proxy Type to Avoid Blocks

| Proxy Type | Core Features | Trust Level | When They Work Best |
| --- | --- | --- | --- |
| Datacenter Proxies | Hosted on cloud servers, fast, inexpensive | Low | Non-protected sites, internal testing, short-lived scraping |
| Residential Proxies | Real user IPs assigned by ISPs | Medium to High | Most scraping workloads that need to scale |
| Mobile Proxies | IPs from mobile carrier networks | Very High | Heavily protected sites, login flows, sensitive actions |
| ISP Proxies | Static residential IPs from ISPs | High | Sticky sessions, accounts, long-running interactions |

Why Network Awareness Matters More Than Proxy Labels

One important detail often overlooked is where these IPs come from under the hood. Two residential proxies can behave differently if one comes from a single overloaded ASN and the other is spread across many clean networks.

This is where ASN-aware proxy pools make a real difference. Some providers, like Decodo, expose residential pools designed to distribute traffic across multiple ASNs, helping reduce subnet-level penalties and avoid poisoning entire IP blocks at scale.

Designing a Block-Resistant Scraping Architecture

Websites don’t just judge where requests come from; they also evaluate how those requests behave over time. A block-resistant setup aligns both.

1. Request Pacing & Burst Control

Introducing human-like pacing is a must. Instead of fixed delays, use small variations between requests. A few seconds faster here, a few slower there, creates a rhythm.

Just as important is avoiding predictable spikes. Spreading requests evenly over time keeps activity below detection thresholds and preserves trust longer.
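A minimal sketch of jittered pacing, assuming a base delay and a jitter fraction that you would tune per site. The function name and default values are illustrative, not recommendations.

```python
import random


def jittered_delays(n, base=3.0, jitter=0.5, rng=None):
    """Return n delays in seconds, each within base * (1 +/- jitter).

    Varying every delay avoids the fixed interval that makes
    automated traffic easy to spot.
    """
    rng = rng or random.Random()
    return [base * rng.uniform(1 - jitter, 1 + jitter) for _ in range(n)]


# Typical use: sleep for each delay between requests, e.g.
#   for delay in jittered_delays(len(urls)):
#       time.sleep(delay)
#       fetch(next_url)   # fetch is a placeholder for your request code
print([round(d, 2) for d in jittered_delays(5)])
```

With the defaults, every delay falls between 1.5 and 4.5 seconds, so requests never arrive on a fixed beat and never bunch up into a burst.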

2. IP + Header Consistency

To stay consistent:

- Match IP location with Accept-Language, timezone, and locale

- Use realistic user agents that align with the region

- Avoid impossible combinations, like a mobile IP sending desktop-only headers, or a US IP consistently requesting content in an unrelated language
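A simple pre-flight check can catch the most obvious mismatches before a request goes out. The sketch below only covers `Accept-Language` (timezone and locale are usually exposed through the browser rather than HTTP headers), and the country-to-language table and function names are illustrative assumptions.

```python
# Illustrative mapping from proxy country to the expected primary language.
EXPECTED_LANGUAGE = {"US": "en", "GB": "en", "DE": "de", "FR": "fr"}


def primary_language(accept_language: str) -> str:
    """Extract the first language from an Accept-Language value.

    "de-DE,de;q=0.9,en;q=0.8" -> "de"
    """
    first = accept_language.split(",")[0]
    tag = first.split(";")[0].strip()
    return tag.split("-")[0].lower()


def headers_match_ip(ip_country: str, headers: dict) -> bool:
    """Return False when the header language contradicts the IP location."""
    expected = EXPECTED_LANGUAGE.get(ip_country)
    if expected is None:
        return True  # no expectation recorded for this country
    return primary_language(headers.get("Accept-Language", "")) == expected


german_headers = {"Accept-Language": "de-DE,de;q=0.9,en;q=0.8"}
print(headers_match_ip("DE", german_headers))  # True
print(headers_match_ip("US", german_headers))  # False
```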

3. Rotation Strategy by Task

Different tasks carry different risk levels, and the rotation strategy should reflect that.

- Search and listing pages typically tolerate higher rotation and broader distribution. These pages are accessed frequently by real users, making per-request rotation more effective.

- Detail pages often benefit from slower pacing and short-lived sessions to avoid repetitive access patterns.

- Login-based scraping requires stability. Session-based rotation with sticky IPs helps maintain continuity and avoid re-authentication challenges.

- Anonymous scraping can rotate more aggressively, as there’s no session state to preserve.
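One way to keep these choices explicit is a small policy table keyed by task type. The sketch below is a hypothetical configuration: the `RotationPolicy` fields and every number in it are assumptions meant to show the shape, not tuned values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RotationPolicy:
    mode: str                           # "per_request" or "session"
    min_delay_s: float                  # lower bound on pacing between requests
    max_requests_per_ip: Optional[int]  # None = keep the IP for the whole session


# Illustrative values only.
POLICIES = {
    "listing": RotationPolicy("per_request", 1.0, 1),
    "detail": RotationPolicy("session", 4.0, 20),     # slower, short-lived sessions
    "login": RotationPolicy("session", 3.0, None),    # sticky IP for continuity
    "anonymous": RotationPolicy("per_request", 0.5, 1),
}


def policy_for(task: str) -> RotationPolicy:
    """Look up the rotation policy for a task type."""
    return POLICIES[task]


print(policy_for("login"))
```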

Common Mistakes That Still Get Scrapers Blocked

Here are some of the easiest mistakes to make:

Rotating IPs but Staying in One ASN

To websites, this looks like the same network sending traffic from slightly different addresses. Once that ASN’s reputation drops, rotating within it offers little protection.

Using Residential IPs with Datacenter-Like Behavior

This mismatch is especially damaging because it violates expectations. Residential traffic is assumed to be human-like. When behavior contradicts that assumption, trust drops faster, and blocks arrive sooner.

Ignoring Regional Mismatch

When IP location doesn’t align with language, content access patterns, or target markets, it raises suspicion. Even small mismatches add up when repeated at scale.

Reusing Burned Subnets

Rotating back into a poisoned range often leads to immediate blocks, sometimes even before the first request completes. Without subnet awareness, rotation can accidentally recycle those very IPs that caused the problem in the first place.
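Subnet awareness can be as simple as remembering which /24 ranges have been burned and filtering the pool before rotating. The `SubnetQuarantine` class below is a hypothetical sketch built on Python's standard `ipaddress` module, with documentation-range addresses as sample data.

```python
import ipaddress


class SubnetQuarantine:
    """Remember burned /24 subnets and keep rotation out of them."""

    def __init__(self):
        self._burned = set()

    @staticmethod
    def _subnet(ip):
        return ipaddress.ip_network(f"{ip}/24", strict=False)

    def burn(self, ip):
        """Mark the whole /24 around a blocked IP as burned."""
        self._burned.add(self._subnet(ip))

    def is_clean(self, ip):
        return self._subnet(ip) not in self._burned

    def filter(self, pool):
        """Return only the IPs that sit outside burned subnets."""
        return [ip for ip in pool if self.is_clean(ip)]


quarantine = SubnetQuarantine()
quarantine.burn("203.0.113.5")

pool = ["203.0.113.77", "198.51.100.9", "192.0.2.14"]
print(quarantine.filter(pool))
# ['198.51.100.9', '192.0.2.14']
```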

When Managed Scraping APIs Make Sense

At some point, the challenge stops being about scraping logic and starts being about infrastructure.

The Hidden Cost of Self-Managing

Maintaining your own proxy and rotation system means constantly managing moving parts:

- Monitoring IP reputation and burn rates

- Tracking subnet and ASN health

- Adjusting rotation logic as sites change defenses

- Handling geo-targeting, headers, pacing, and retries

- Replacing poisoned IP pools before they cascade into failures

What Managed Scraping APIs Abstract Away

Instead of exposing raw proxies, managed scraping APIs abstract the most failure-prone layers of scraping:

- IP rotation that adapts automatically.

- ASN diversity baked into the network pool.

- Geo-targeting aligned with real-user traffic patterns.

In practice, Decodo is built to handle these layers automatically, so developers don’t need to maintain proxy reputation and rotation systems internally.

When This Trade-Off is Worth It

Managed APIs are especially useful when:

- Scraping is a means to an end, not the core product.

- Teams want reliability without constant tuning.

- Engineering time is more valuable than infrastructure control.

Solutions like Decodo’s Web Scraping APIs are designed around this exact trade-off, handling IP rotation, ASN diversity, and geo-distribution automatically for teams that don’t want to maintain complex proxy logic themselves.

Managed APIs are better because they’re simpler. And for many teams, simplicity is what keeps scraping projects alive long-term.

IP blocking isn’t something you fix with a single tool or setting. It’s the result of how traffic looks in aggregate, across IPs, subnets, ASNs, regions, and time. What matters is understanding why blocks happen in the first place. Once you design around trust rather than volume, scraping stops feeling fragile and starts becoming predictable.


FAQs

Always respect site terms of service, rate limits, and robots.txt where applicable, and avoid scraping personal or sensitive data. If data is copyrighted, gated, or personally identifiable, get legal advice before collecting or storing it.

Scrape public data only, avoid harming site performance, and don’t interfere with real users. Keep request volume human-like, identify downstream data use responsibly, and ensure data isn’t misused or resold harmfully.

A 1-5% block or CAPTCHA rate is typical. Sudden spikes usually indicate IP reputation decay, incorrect geo-alignment, or pacing issues. Consistent double-digit block rates suggest structural design problems.

Track how often an IP triggers CAPTCHAs, 4xx/5xx responses, rate limits, or abnormal latency. If these signals increase over time for the same IP or subnet, trust is dropping and that IP is burning. Good systems retire IPs before they fully fail.
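A rolling per-IP tracker is one way to act on those signals. The sketch below is an illustration under stated assumptions: the `IPHealth` class, the window size, the 10% retirement threshold, and the choice to count only 403, 429, 5xx, and CAPTCHA responses as "bad" are all made up for this example (a 404, for instance, is usually a content issue rather than a block).

```python
from collections import deque


class IPHealth:
    """Track recent outcomes for one IP and flag it before it fully burns."""

    def __init__(self, window=50, retire_at=0.10):
        self.outcomes = deque(maxlen=window)  # True = blocked or challenged
        self.retire_at = retire_at

    def record(self, status_code, captcha=False):
        """Store whether a response looked like a block signal."""
        bad = captcha or status_code in (403, 429) or status_code >= 500
        self.outcomes.append(bad)

    @property
    def block_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_retire(self, min_samples=20):
        """Retire once enough samples show a double-digit block rate."""
        return len(self.outcomes) >= min_samples and self.block_rate >= self.retire_at


health = IPHealth()
for code in [200] * 17 + [429, 403, 429]:
    health.record(code)

print(health.block_rate)       # 0.15
print(health.should_retire())  # True
```

The same counters can be kept per subnet or per ASN to spot a whole range going bad rather than a single address.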

Disclosure – This post contains some sponsored links and some affiliate links, and we may earn a commission when you click on the links at no additional cost to you.