Scraping Reddit for data is more difficult than ever in 2025. Reddit blocks more scraping methods every month, and most old tools fail. Scripts that once worked are obsolete.

Extraction now hits paywalls, constant captchas, or instant blocks. Manual techniques waste time and yield errors. In 2025, anyone serious about collecting Reddit data needs software built to survive these updated defenses.

This blog cuts through legacy myths. It shows only the updated Reddit scrapers that actually work now. Proxy support, stealth modes, anti-fingerprint solutions, and JavaScript support are the current needs. Only use these tools if accuracy and efficiency are the goals.

What to Look for in a Reddit Scraper

- Stealth and Anti-Detection: Scraper must avoid Reddit’s rate limits, captchas, and browser fingerprint checks, or it will get blocked.

- Structure Data Output: JSON or CSV support is required for large-scale collection and analysis. Only scrapers with clear, organized output save your time.

- Comment & Thread Support: The Tool must extract both posts and complete, nested comment threads to preserve conversation context.

- Media Extraction: Must download images, videos, and external links from posts if full context is the goal.

- Scheduler & Automation: Should allow automated runs and scheduling for continuous data pulls without any constant manual setup.

Table of Contents

| 1. Reddit API |

| 2. PRAW |

| 3. Pushshift API |

| 4. Apify |

| 5. Bright Data |

| 6. Scraper API |

| 7. Outscraper |

| 8. Axiom.ai |

| 9. Octoparse |

| 10. Selenium |

Best Reddit Scrapers in 2025

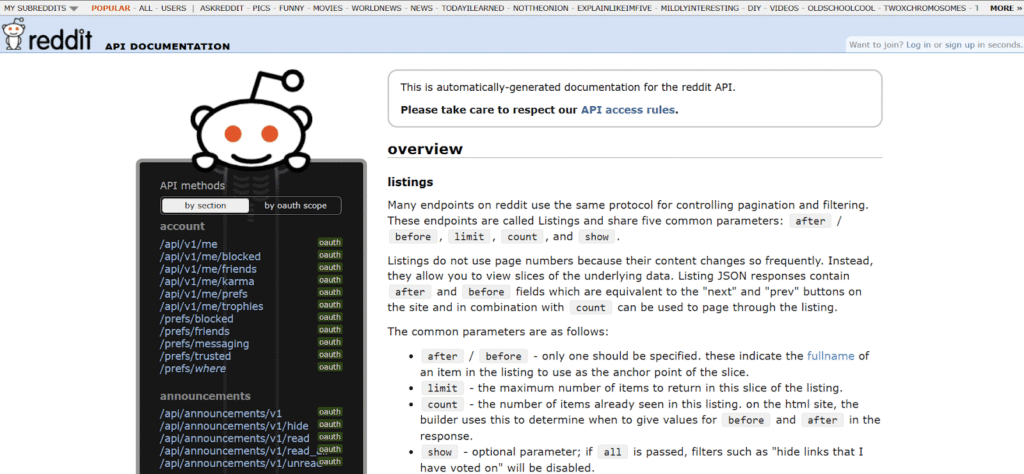

1. Reddit API

The Reddit API is the official channel provided by Reddit for developers to access and interact with Reddit’s extensive ecosystem programmatically. It enables retrieving data on posts, voting, commenting, and moderation tasks. The API enforces OAuth 2.0 authentication and rate limits to manage access and prevent any kind of abuse, making it suitable for applications requiring secure, real-time access to Reddit data. However, its rate limits and access restrictions can make large-scale scraping challenging, especially for bulk historical data.

Key Features:

- Data Retrieval: Access structured data for posts, comments, subreddits, and user profiles.

- User Interaction: Submit new posts, comments, votes, and send messages programmatically.

- Moderation Capabilities: Manage content approval, removals, and access moderation logs.

- OAuth Security: Uses OAuth 2.0 for secure user authentication and controlled API access.

- Rate Limits: Enforced limits to ensure fair use and system stability.

Pros

- Official and well-documented API with continuous updates and community support.

- Trustworthy and secure for real-time and interactive applications.

- Supports full Reddit interaction, including posting and moderation.

Cons

- Strict rate limits limit extensive data extraction or bulk downloads.

- OAuth setup adds technical complexity for new users or rapid testing.

- Certain data or actions might be restricted to protect privacy and platform integrity.

2. PRAW

PRAW is a Python library that wraps around the official Reddit API, which makes it simpler and more intuitive for developers to retrieve and manage Reddit’s data in Python environments. It abstracts complex API calls, handles rate limiting automatically, and presents Reddit data as Python objects. This makes it an ideal tool for scripting, automation, bot development, and data analysis tasks that involve Reddit content without needing to handle low-level API details.

Key Features:

- Pythonic Interface: Provides Python classes and methods to access Reddit posts, comments, users, and subreddits.

- Automatic Rate Management: Handles Reddit API rate limits and retry strategies internally.

- Comprehensive Object Models: Maps Reddit entities like submissions, comments, and authors to Python objects.

- Easy Integration: Suitable for quick scripts, bots, or larger data pipelines using Python.

Pros:

- Significantly reduces development time and complexity for Python users.

- Supports the entire range of Reddit API functions with clear, readable code.

- Active open-source community and well-maintained documentation.

Cons:

- Limited by the official Reddit API’s scope and restrictions.

- Requires an API key and user authentication to use effectively.

- Not suited for non-Python environments.

3. Pushshift API

Pushshift API is a third-party, community-driven RESTful API that is designed to provide extensive access to Reddit’s historical data archives, which surpasses the limitations of the official Reddit API. It stores and indexes billions of Reddit comments and submissions, enabling powerful search, filtering, and aggregation over extended time periods. Pushshift is widely used by researchers, analysts, and developers requiring large datasets, historical insights, and difficult query capabilities involving Reddit data going back many years.

Key Features:

- Historical Archives: Access to Reddit comments and submissions dating back to Reddit’s inception.

- Advanced Querying: Powerful search filters including time frames, subreddits, authors, keywords, and more.

- Bulk Data Download: Facilitates downloading large datasets suitable for research and data mining.

- Elasticsearch Backend: Offers efficient, scalable full-text search capabilities through an Elasticsearch service.

Pros:

- Unmatched access to large-scale Reddit historical data.

- Supports complex queries not possible with the official API.

- Crucial for academic research, social media analysis, and trend discovery.

Cons:

- Not officially affiliated with Reddit, hence potential policy or access changes.

- Some features are limited to verified users or moderators.

- Occasional service interruptions and uncertain long-term availability.

4. Apify

Apify is a cloud-based web scraping and automation platform that offers ready-to-use Reddit scrapers through its marketplace. It enables users, even those without programming skills, to scrape posts, comments, user profiles, and media from Reddit with simple setup and scheduling options. Apify handles challenges like proxy rotation and CAPTCHA solving to facilitate smoother scraping processes. It is suited for businesses and individuals needing scalable data extraction with minimal technical overhead, though scraper quality might vary as many are third-party-built.

Key Features:

- No-Code Scraping: Pre-built Reddit scrapers accessible through a user-friendly web interface.

- Automation and Scheduling: Allows setting recurring scraping jobs and automated data collection.

- Proxy and CAPTCHA Integration: Built-in solutions for overcoming common scraping obstacles.

- Data Export Flexibility: Supports JSON, CSV, and other formats for easy workflow integration.

Pros:

- Ideal for users without coding expertise.

- Integration of proxy management and CAPTCHA handling enhances reliability.

- Marketplace offers multiple scraper options catering to different needs.

Cons:

- Dependent on the maintenance and quality of third-party scrapers.

- Limited customization and control compared to bespoke solutions.

- Cost may grow exponentially with heavy usage and advanced features.

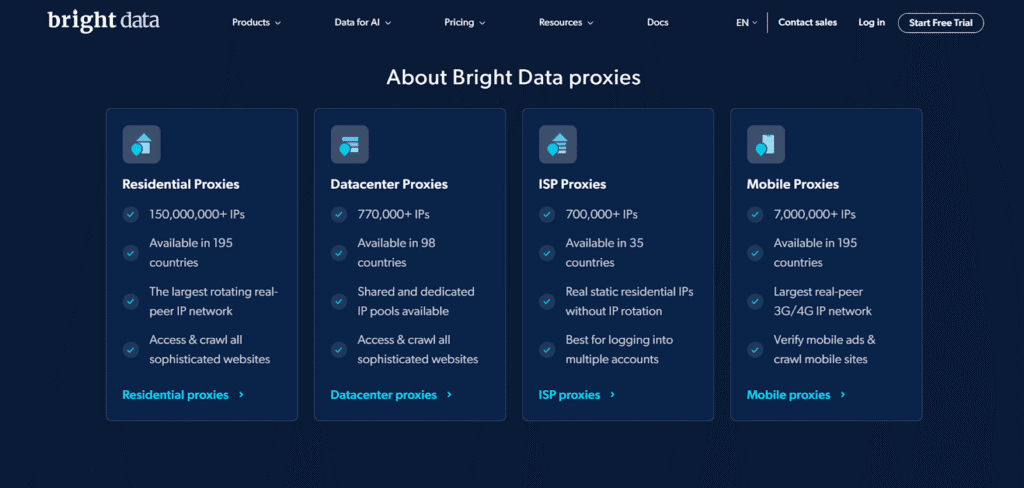

5. Bright Data

Bright Data is a leading enterprise-grade data collection service with a massive proxy network and advanced anti-bot bypass technologies that enable large-scale and stealthy Reddit data extraction. Its Reddit MCP server is designed to extract posts, comments, user activity, and full thread data while avoiding blocks caused by Reddit’s anti-scraping defenses. Bright Data offers extensive proxy coverage worldwide, detailed control through a sophisticated dashboard, API access for automation, and responsive support. This makes it the top choice for complex, high-volume Reddit scraping projects with demanding reliability and compliance requirements.

Key Features

- Extensive Proxy Network: Access to over 770,000 IPs across nearly 100 countries for rotating proxies.

- Anti-Bot Evasion Techniques: Sophisticated tools to bypass Reddit’s captchas, rate limits, and fingerprinting.

- Comprehensive Data Extraction: Captures posts, comments, user karma, and nested discussions accurately.

- Advanced Control & Automation: Real-time monitoring, customizable workflows, and accessible via API.

- Responsive Support: 24/7 enterprise-level customer service ensuring uptime and troubleshooting.

Pros

- Superior reliability & uptime for expansive, mission-critical scraping needs.

- Scalable infrastructure suited for large datasets and complex workflows.

- Strong proxy rotation and anti-detection capabilities minimize scraping failures.

Cons

- Premium pricing makes it less accessible for small users or low-budget projects.

- Platform complexity may require technical familiarity for efficient use.

- Bandwidth and usage caps can restrict extremely large-scale operations without additional cost.

6. Scraper API

Scraper API is a strong web scraping tool that is designed to handle proxies, browsers, and CAPTCHA automatically, simplifying the scraping of complex websites like Reddit. It abstracts the entire proxy management and request handling process so developers can focus solely on extracting data. Scraper API supports JavaScript rendering and retries failed requests, making it ideal for scraping dynamic content and avoiding blocks during high-volume data extraction.

Key Features

- Proxy & IP Rotation: Automatically rotates IP addresses from a vast proxy pool to prevent detection.

- JavaScript Rendering: Supports accessing content that requires JavaScript execution for loading.

- Automatic Captcha Handling: Solves Captchas without manual intervention to maintain steady scraping.

- Highly Scalable API: Handles large volumes of requests with retries and error handling built in.

Pros

- Minimal setup with fully managed proxy and CAPTCHA services.

- Supports complex, dynamic web pages needing JavaScript execution.

- Scale-friendly with consistent performance for large data needs.

Cons

- Costs can rise significantly with heavy usage.

- Less customizable for users wanting fine control over scraping logic.

- No built-in UI; primarily developer-focused API service.

7. Outscraper

Outscraper is a cloud-powered scraping service focused on simplicity and ease of use. It offers ready-to-use APIs for extracting data from multiple web services, including Reddit. It provides easy search and extraction of posts, comments, and user data with minimal configuration. Outscraper handles proxies, CAPTCHA solving, and data parsing on its end, delivering clean data for analytics, market research, and competitive intelligence.

Key Features

- One-Click APIs: Quick access for fetching Reddit posts, comments, and user profiles.

- Automatic Poxy Management: Ensures anonymity and bypasses scraping restrictions.

- CAPTCHA & Bot Detection Handling: Integrated mechanisms to reduce blocking risks.

- Flexible Data Formats: Outputs results in JSON, CSV, or Excel formats for easy use.

Pros

- User-friendly interface and minimal setup requirements.

- No need to manage proxies or CAPTCHA manually.

- Useful for smaller projects and fast data retrieval.

Cons

- Limited customization options for advanced users.

- API quotas may limit heavy or extended scraping efforts.

- Dependence on service availability and updates.

8. Axiom.ai

Axiom.ai is a no-code web automation and scraping platform that is geared towards business users and non-developers. It enables users to build custom scraping and automation workflows through an intuitive visual editor without any programming. Axiom supports scheduling, data export, multi-step workflows, and proxy management, making it accessible for extracting Reddit data efficiently without writing code.

Key Features

- Visual Workflow Builder: Drag-and-drop interface to create scraping and automation pipelines.

- Scheduled Runs: Automate scraping tasks on a set timetable or trigger-based.

- Proxy Integration: Built-in support for proxies to avoid detection.

- Multi-Format Export: Export scraped data in CSV, Excel, or JSON formats.

Pros

- No coding skills required, and it is accessible to business users.

- Flexible for a variety of scraping and automation scenarios.

- Supports recurring data collection with scheduling.

Cons

- May struggle with very complex scraping logic.

- Subscription pricing varies with usage and is potentially costly.

- Limited control compared to code-based scrapers.

9. Octoparse

Octoparse is a widely used visual web scraping software that is designed for both beginners and tech-savvy users. It provides a desktop client and cloud platform to build scraping workflows without coding via an easy-to-use point-and-click interface. Octoparse handles anti-bot mechanisms with built-in proxies and offers scheduling, cloud extraction, and multi-format exports, enabling efficient Reddit data extraction at scale.

Key Features

- Visual Workflow Creation: Simple point-and-click to design scraping tasks without coding.

- Cloud and Local Extraction: Offers both cloud-based scraping and local extraction options.

- Anti-Bot Bypass: Incorporates proxy rotation and CAPTCHA solving tools.

- Export Flexibility: Supports exporting data in Excel, CSV, HTML, and databases.

Pros

- User-friendly for non-programmers with powerful features for pros.

- Supports large-scale data collection with cloud services.

- Comprehensive tutorials and community resources.

Cons

- Cloud service pricing may be expensive for heavy usage.

- Certain advanced scraping scenarios require technical know-how.

- The desktop app might be resource-intensive on low-end machines.

10. Selenium

Selenium is an open-source browser automation tool widely used for testing, but also powerful for web scraping, especially sites with heavy JavaScript or interactive features like Reddit. Unlike traditional scrapers, Selenium controls a real browser instance, enabling it to navigate dynamically generated content and affect user behavior to avoid blocks.

Key Features

- Full Browser Automation: Controls Chrome, Firefox, and other browsers for precise interaction.

- JavaScript Execution: Able to scrape content rendered via JavaScript frameworks.

- User Interaction Simulation: Mimics real users by clicking, scrolling, and form-filling.

- Cross-Platform: Compatible with multiple OS and programming languages.

Pros

- Highly flexible with control over the entire browsing session.

- Ideal for scraping dynamic, interactive web pages.

- Extensive language and platform support.

Cons

- Slower than traditional scrapers due to full browser overhead.

- Requires programming skills and setup.

- Less scalable for very large data extraction due to resource consumption.

Why is Reddit Data Extraction Important?

- Access to Real-Time Opinions: Reddit data captures raw user thoughts and reactions on countless topics immediately.

- Market & Competitor Insights: Extracted data helps analyze trends, customer preferences, and competitor activities for strategic decisions.

- Sentiment & Social Analysis: Reddit data fuels sentiment tracking and social trend monitoring to gauge public mood and emerging issues.

- Source for AI & Research: The vast text and behavioral data support training for AI models and deep community understanding.

- Comprehensive Context: Posts, comments, and media provide full conversational context for better data-driven conclusions.

Conclusion

In 2025, scraping Reddit data requires tools that are adaptive and powerful enough to overcome constant platform updates and strict anti-bot defenses. The scrapers reviewed here each excel by offering unique strengths in reliability, ease of use, scale, and security.

Selecting the right Reddit scraper depends on data volume needs, technical capability, and project goals. Smaller-scale projects benefit from intuitive, ready-to-use scrapers, while large-scale extraction demands advanced proxy control and anti-blocking mechanisms. Regardless of choice, continuous monitoring and adaptation remain mandatory to sustain Reddit access as the platform evolves.

Use these insights to efficiently capture Reddit data with minimal effort and maximum security.

Check out our other blogs for more such informative content–

- Best Proxy Manager Platforms in 2025

- Forward Proxy vs Reverse Proxy: What’s the Difference?

- Top Web Scraping Proxies in 2025

FAQs

A Reddit scraper is a program or script that collects large amounts of data from Reddit without manual browsing. It can access posts, comments, user details, and subreddit information for research or personal projects, using Reddit’s API or by parsing the website directly.

Reddit forbids unauthorized scraping in its terms of service. Running scrapers that ignore these rules can lead to losing access, getting IP addresses blocked, or having accounts permanently suspended. Relying on official APIs with required permissions is the permitted way to access Reddit data.

A Reddit scraper commonly gathers titles and bodies of posts, post times, usernames of posters, comment threads, upvote counts, and full subreddit details. Advanced scrapers can also extract nested replies, awards given to posts, and the history of user activity.

Using Reddit scrapers comes with risks, including possible bans from the site, legal trouble if personal or sensitive data is misused, and incomplete data if Reddit changes its site layout or API settings. Improper scraping may also cause data loss if scripts are not coded to handle failures.

Disclosure – This post contains some sponsored links and some affiliate links and we may earn a commission when you click on the links, at no additional cost to you.