With over 200 million active websites and new pages popping up every second, finding the right information can feel like searching for gold in a digital haystack. Web crawlers make this task easier. They help you collect and organize data from across the web with speed and accuracy.

Let’s dive into the best web crawler tools of 2025 that are changing the way we explore and use the web.

What is a Web Crawler?

A web crawler is like a digital bot that moves systematically from one web page to another, following links, and mapping the structure of the internet.

Here, things often get mixed up: crawling and scraping aren’t the same thing.

- Crawling is about discovery. A crawler scans web pages to understand how they connect, much like Google’s bots mapping the web to build search results. It’s all about finding and organizing information.

- Scraping, on the other hand, is about extraction. A scraper collects specific data, like product prices, reviews, or emails, and turns it into a structured format that you can analyze or store.

In simpler words, crawlers explore the web. Scrapers collect what’s valuable from it.

Why Use Web Crawlers in 2025

So, what makes web crawlers so valuable today? Let’s break it down:

1. SEO Audits & Broken Link Detection

Keeping your website in top shape starts with knowing what’s working and what’s not. Web crawlers can scan every page of your site in minutes, flagging broken links, duplicate content, and performance issues that might impact your rankings.

2. Large-Scale Website Indexing

For content-heavy platforms, news aggregators, or research projects, manually tracking thousands of pages is impossible. Crawlers automate this process, mapping site structures and building organized indexes that make massive datasets manageable.

3. AI Training Data Collection

As generative AI and large models (LLMs) continue to dominate tech conversations, the demand for high-quality real-time data has skyrocketed. AI systems thrive on diverse, updated information, and web crawlers are how organizations feed AI models with structured, fresh data.

4. Content Discovery & Compliance Checks

Brands and media houses use crawlers to monitor new content, ensure compliance with copyright laws, or even detect unauthorized use of their material across the web.

Legal & Ethical Considerations

To stay compliant and crawl responsibly, here’s a quick checklist:

1. Respect robots.txt

Always check the robots.txt file before crawling.

2. Avoid Personal Data

Steer clear of collecting names, emails, or sensitive user information unless you have explicit consent.

3. Use Reasonable Request Intervals

Bombarding servers with thousands of requests per second can slow down or crash websites.

4. Attribute Your Sources

If you’re redistributing or publishing crawled public data, credit the original site.

Comparison Table of Top Web Crawler Tools in 2025

| Platform | Best For | Ease of Use | Ideal User |

| ParseHub | Beginners & No-Code Users | 4/5 | Marketers, Students |

| Octoparse | Automation & Scheduling | 4/5 | E-Commerce, Analysts |

| WebHarvy | Visual Extraction | 4/5 | Researchers, Marketers |

| Scrapy | Developers & Data Scientists | 2/5 | Developers, AI Teams |

| Apify | Automation & API Workflows | 3/5 | Developers, Data Teams |

| Diffbot | AI & Structured Data | 2/5 | Enterprises, AI Labs |

| Screaming Frog | SEO Audits | 4/5 | SEOs, Webmasters |

| 80legs | High-Volume Crawling | 3/5 | Data Engineers, Researchers |

| Sitebulb | Visual SEO Audits | 4/5 | SEO Teams, Agencies |

Top Web Crawler Tools in 2025

| Table of Contents |

| Beginner-Friendly & No-Code Crawlers |

| 1. ParseHub |

| 2. Octoparse |

| 3. WebHarvy |

| Developer & Power-User Crawlers |

| 1. Scrapy |

| 2. Apify |

| 3. Diffbot |

| SEO & Site Auditing Crawlers |

| 1. Screaming Frog |

| 2. 80legs |

| 3. Sitebulb |

Beginner-Friendly & No-Code Crawlers

1. ParseHub

If you’re stepping into the world of data collection, ParseHub is your go-to starting point. It’s a no-code web crawler designed for simplicity. You can extract data visually without touching a single line of code.

Key Features:

- Point-and-Click Interface: Simply click on the elements you want, and ParseHub does the rest.

- Free Tier with Generous Limits: Crawl up to 200 pages per hour for free, perfect for small projects and learning purposes.

- Built-In Proxy Support: Keeps your crawls stable and reduces the risk of IP bans during extraction.

- Multi-Format Data Export: Download your extracted data as CSV, Excel, or JSON in just one click.

- Works on Dynamic Websites: Can handle AJAX and JavaScript-heavy pages better than most entry-level crawlers.

Cons:

- Not Ideal for Complex Sites: Performance can slow down when dealing with multi-layered or dynamically loaded sites.

- Limited Customization: Advanced users might find its automation options too restricted compared to scripting tools.

Review: ParseHub makes web crawling easy, accessible, and beginner-friendly. It’s ideal for anyone who wants structured data without getting lost in technical setups. A reliable pick for marketers, students, and researchers starting out in 2025.

2. Octoparse

If you want to take web crawling a step further without diving into code, Octoparse is your power tool. Known for its ready-to-use templates, smart scheduling, a smooth interface, it’s ideal for users who want efficiency and automation without the tech hassle.

Key Features:

- Pre-Built Extraction Templates: Find hundreds of ready-made templates for popular sites.

- Task Scheduling: Plan crawls ahead of time with built-in schedulers, allowing data extraction to run smoothly.

- Local & Cloud Crawling Options: Run tasks locally on your machine or scale up with cloud-based crawling for faster performance.

- CAPTCHA & Proxy Handling: Comes with IP rotation and smart anti-blocking mechanisms that keep your crawls smooth.

- Intuitive Drag-and-Drop Workflow: The user interface is visually structured, letting you build complex crawls just by dragging elements around.

Cons:

- Limited Free Cloud Crawls: While local tasks are free, cloud-based automation features require a paid plan.

- Heavy Software Setup: As a desktop app, Octoparse can be resource-intensive on older systems.

Review: Octoparse stands out for bridging the gap between beginner ease and professional depth. Its templates and automation tools make it perfect for marketers, e-commerce analysts, and SEO teams who need recurring crawls.

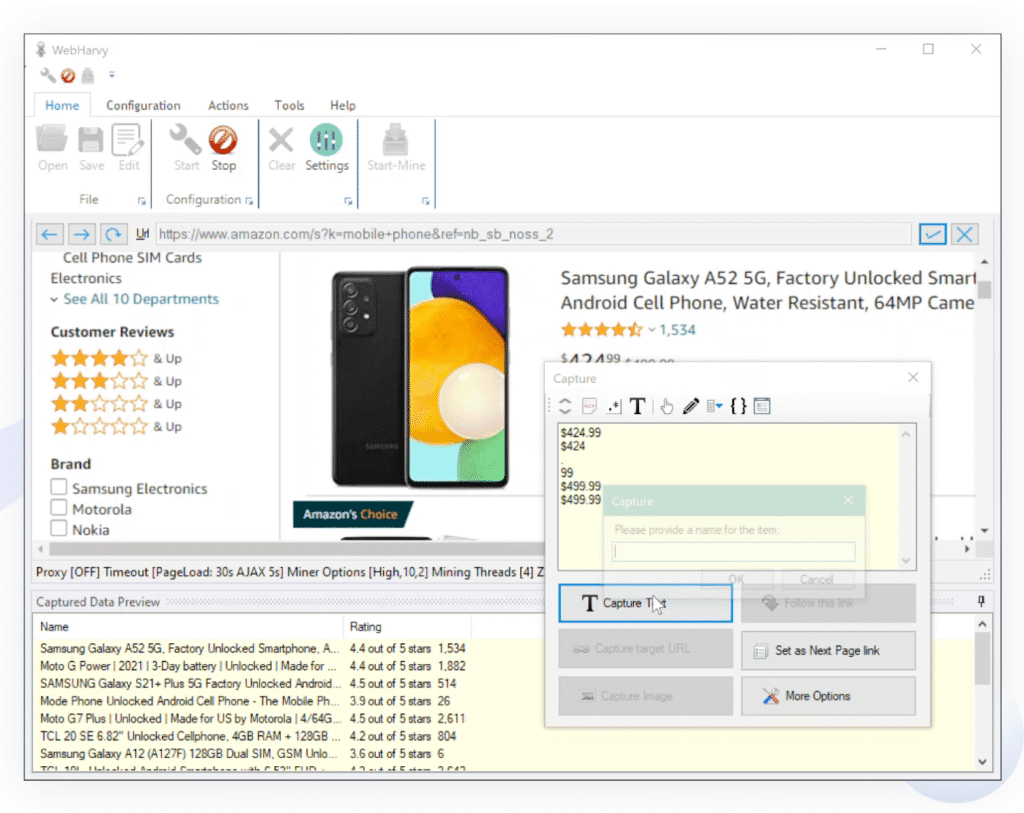

3. WebHarvy

If you love simplicity and visuals, WebHarvy is a web crawler that feels perfect. It automatically identifies patterns in web data, so you can extract images, text, or links just by clicking. It is perfect for anyone who prefers a visual, no-code approach to data collection.

Key Features:

- Pattern Detection Technology: WebHarvy smartly detects repeating data patterns and extracts them automatically.

- Visual Point-and-Click Selection: Select data directly on the webpage, and let the software capture similar elements instantly.

- Supports Images, Texts & Links: Unlike many basic crawlers, WebHarvy can scrape images and multimedia content effortlessly.

- Built-In Browser Interface: You can browse, select, and scrape all within WebHarvy’s built-in browser window.

- Easy Export Option: Export scraped data in CSV, XML, JSON, or Excel formats for seamless use in analytics or reporting.

Cons:

- Windows-Only Software: Currently, WebHarvy is has limited accessibility for non-Window users.

- Limited Advanced Automation: It’s not designed for large-scale or multi-layered crawling tasks.

Review: WebHarvy shines as one of the most user-friendly and visually intuitive web crawlers in 2025. If your projects are focused on image-heavy or visual data, WebHarvy’s automation magic makes it a top contender.

Developer & Power-User Crawlers

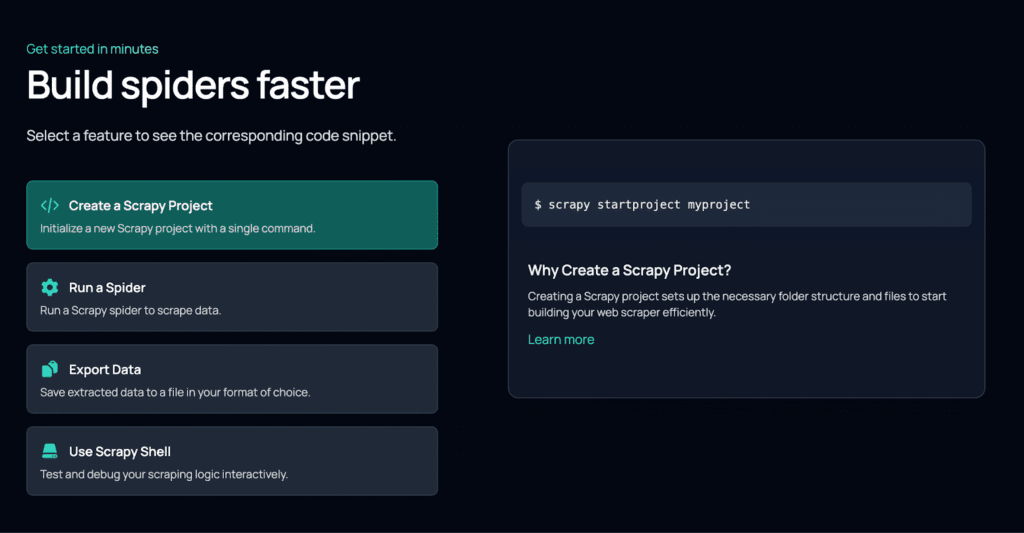

1. Scrapy

For developers and data professionals who love having total control, Scrapy is a powerful tool. It’s an open-source Python framework built for high-performance web crawling and scraping at scale, making it the go-to choice for serious data projects in 2025.

Key Features:

- Open-Source and Free: Scrapy is completely free and backed by a large community.

- Lightning-Fast Crawling: It allows you to crawl thousands of pages per minute with asynchronous requests.

- Modular and Extensible Design: Create custom middlewares, integrate APIs, or add your own logic.

- Built-In Data Pipelines: Automatically clean, process, and export data into JSON, CSV, or databases right after extraction.

- Strong Proxy & Anti-Ban Integration: Works seamlessly with proxy providers and user-agent rotation to minimize blocks during large-scale crawls.

Cons:

- Requires Programming Knowledge: You’ll need to be familiar with Python to get started.

- Steeper Learning Curve: It takes time to master compared to no-code or template-based crawlers.

Review: Scrapy is any developer’s dream crawler; it is fast, flexible, and infinitely customizable. Though it demands some coding knowledge, the payoff is massive scalability and precision.

2. Apify

If you’re looking for developer-friendly crawler that plays well with automation and APIs, Apify is a top-tier choice. Built on Node.js , it’s designed for scale, offering flexibility for coders and convenience for teams that need to run web crawls, scrapers, or bots on autopilots.

Key Features:

- Node.js-Based Framework: Apify runs on JavaScript, making it ideal for developers comfortable with Node.js and asynchronous web automation.

- API-Driven Workflows: You can trigger, monitor, and manage crawls through REST APIs.

- Smart Proxy Rotation: Apify’s built-in proxy management automatically switches IPs to avoid bans and keep large crawls running smoothly.

- Actor System for Custom Bots: Create and deploy your own “actors,” automated bots that can scrape, crawl, or interact with websites dynamically.

- Scalable Cloud Infrastructure: Run crawls locally or in the Apify Cloud, where you can schedule, parallelize, and scale.

Cons:

- Technical Setup for Non-Coders: Though documentation is strong, non-developers may find setup intimidating.

- Paid Scaling Costs: Large-scale automation and proxy usage can get pricey for high-volume operations.

Review: Apify is the definition of modern, automated web crawling. With its mix of developer flexibility, robust APIs, and smart scaling, it’s perfect for data teams, AI developers, and automation-driven businesses.

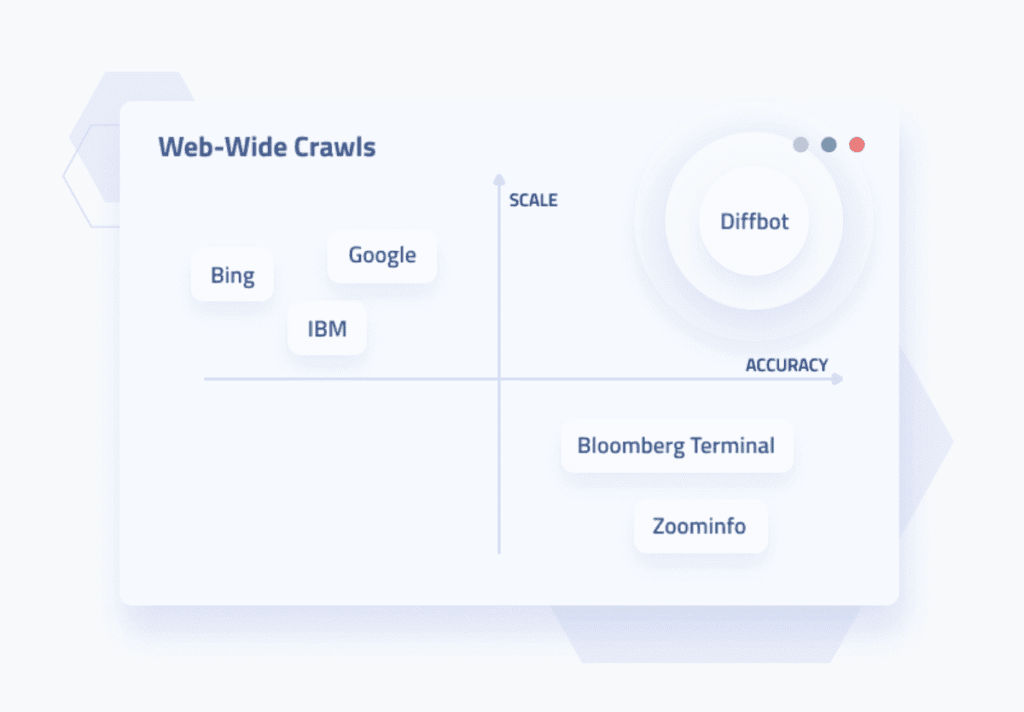

3. Diffbot

If your goal is not just crawling but turning the web into a structured database that powers analytics, AI, or knowledge graphs, then Diffbot is the perfect choice. Built with computer vision, machine learning, and NLP, it’s made for enterprises and data-driven teams.

Key Features:

- AI-Powered Automatic Extraction: Diffbot uses machine vision and NLP to classify web pages and pull out structured fields.

- Massive Knowledge Graph: It maintains a vast database of entities, letting you enrich or query data beyond simple scraping.

- Seamless API Access: You can trigger crawls, extract entities, and integrate the output via REST APIs.

- Scale & Bulk Crawling Capabilities: It supports whole-site crawls, multi-page link following, and bulk data collection.

- Structured Outputs & Enrichment: Beyond raw data, the platform offers context like sentiment, entity relationships, normalized fields.

Cons:

- Higher Cost & Enterprise Orientation: The pricing model and feature-set may be overkill for small teams or simple use-cases.

- Steeper Learning Curve: While the integration is automated, getting full value can require more technical effort.

Review: Diffbot stands out as a premium, enterprise-grade solution for turning the web into structured, actionable datasets. For 2025’s data-driven world, its intelligence and scale make it a logical choice when you’re playing in the big league.

SEO & Site Auditing Crawlers

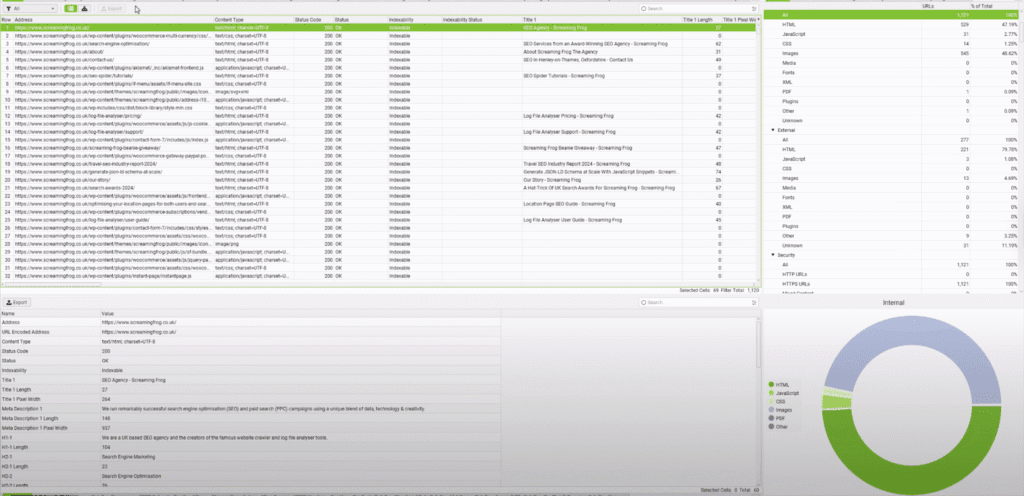

1. Screaming Frog

When it comes to SEO-focused web crawling, nothing beats Screaming Frog. This desktop-based crawler dives deep into your site, helping you uncover broken links, duplicate content, and hidden SEO issues that could be hitting your rankings.

Key Features:

- Comprehensive SEO Auditing: Crawl every corner of your site to detect broken links, missing tags, redirects, and errors with 100% accuracy.

- Metadata & On-Page Insights: Instantly extract titles, meta descriptions, headers, and word counts, giving you a complete SEO health check.

- XML Sitemap Generation: Create and validate XML sitemaps that help search engines crawl your site efficiently.

- Integration with Google Tools: Connect to Google Analytics, Search Console, and PageSpeed Insights for data-rich SEO analysis.

- Custom Filering & Reports: Use advanced filters, regex matching, and export features to tailor your crawl reports for clients or internal audits.

Cons:

- Desktop-Based Limitations: Requires a local installation and can be resource-heaby on large sites with tens of thousands of URLs.

- Steep Learning Curve: Its extensive features can be overwhelming for users new to SEO or technical site audits.

Review: Screaming Grog is built for professionals who want full visibility into their site’s structure and SEO performance. While it’s not as beginner-friendly as some cloud tools, its depth and reliability make it a must-have in every SEO toolkit in 2025.

2. 80legs

If you’re looking for a web crawling platform that can handle very large URLs even on free plans, 80legs is worth a close look. It’s a cloud-based service designed for high-volume, custom crawls without starting from scratch.

Key Features:

- High URL Limit for Free Users: The free tier lets you crawl up to 10K URLs per crawl.

- Cloud-Based, Distributed Architecture: Runs on a global grid of machines, enabling large-scale crawling across many domains.

- Customizable Crawl Jobs: You can define your own crawl patterns, what to extract, how deep to go.

- Data-as-a-Service Option: If you don’t want to build crawls yourself, you can pull from their continuous web crawl.

Cons:

- Less Beginner-Friendly UI: Non-technical users may find the setup and options more complex.

- Cost Scales Quickly for High Volume: Enterprise-grade crawling comes with tighter pricing.

Review: 80legs stands out for its scale and flexibility, especially if you’re somewhat comfortable with technical setup and need to crawl large URL volumes in 2025. For smaller projects or beginners, it might feel over-engineered, but for data teams, it’s a strong contender.

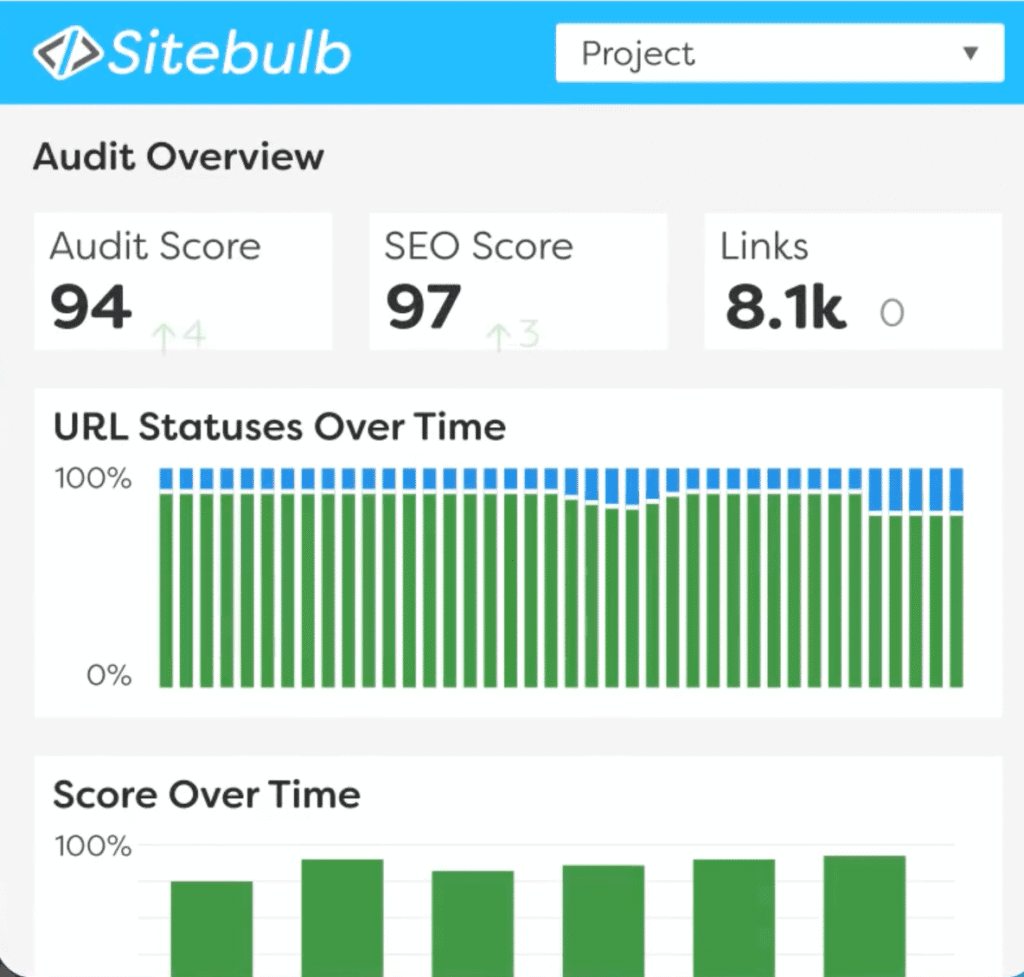

3. Sitebulb

If you’re looking for a crawler that helps you see and interpret problems visually, then Sitebulb is a strong contender. Designed with both technical SEO professionals and marketers in mind, it combines powerful site-crawling with an intuitive interface that makes easy work of complex audits.

Key Features:

- Intelligent “Hints” Prioritization: Sitebulb triggers over 300 SEO issues and sorts them by priority.

- Visualisations & Crawl Maps: See your site’s structure with visuals like a crawl map and directory tree.

- Large-Scale Crawling: Handles big websites via the cloud version, with full support for JS frameworks.

- Full Audit History & Comparison: Track how your site’s health changes over time, compare audits side by side, and show progress to clients.

- Custom Data Extraction: Use point-and-click to extract specific datapoints, and generate client-friendly PDF reports or exports.

Cons:

- Cost Intensive: The price is steep for smaller websites, and large crawls can be resource-heavy.

- Learning Curve: While the UI is friendly, advanced configurations and large-site optimisations may require a bit of time to master.

Review: Sitebulb is valuable for SEO professionals who must interpret complex site data and turn it into easy-to-understand insights for teams or clients. If you’re working with mid-to-large websites or need strong reporting visuals, Sitebulb hits the sweet spot between usability and depth.

Common Challenges & How to Fix Them

| Challenge | Likely Cause | Fix |

| CAPTCHA Blocking | Rapid requests from same IP | Use rotating proxies |

| Incomplete Data | JS-heavy sales | Use headless browsers |

| Crawling Limits | Free plan restriction | Use API or paid plan |

| Legal Warnings | Ignoring robots.txt | Respect site crawling policies |

Before you start your next crawl, set clear objectives, use ethical practices, and choose the tool that lets you turn raw web data into meaningful insights. In a world driven by data and AI, efficient crawling isn’t just a tech skill; it’s your edge in understanding the digital universe.

If the advancements in the AI field excite you, check out some of our expert guides here:

- How to Train GPT Models in 2025: Tools, Techniques, and Common Challenges

- Google AI Mode: How Should Marketers Optimize Better in 2025

- Best AI Visibility Tools for Brand Tracking in ChatGPT and LLMs

FAQs

Yes, as this can help maximize efficiency and coverage. For instance, you can use Scrapy for building custom crawlers and integrate it with Bright Data for proxy rotation or Apify for automation and cloud deployment.

Modern crawlers have evolved to deal with dynamic web content and security barriers:

– JavaScript-Heavy Sites: Tools like Octoparse, WebHarvy, and Sitebulb support headless browser rendering to accurately capture dynamic content.

– CAPTCHA-Heavy Sites: Tools such as Apify, Scrapy, and 80legs can integrate with rotating proxy services or CAPTCHA-solving APIs to bypass soft blocks.

Yes, several enterprise-level tools prioritize compliance:

– Diffbot is GDPR-compliant and operate under strict data privacy frameworks.

– Apify offers data protection agreements (DPA) and adheres to SOC 2 standards for secure cloud operations.

If your goal is to feed data directly into AI or analytics workflows:

– Scrapy integrates naturally with Python

– Apify offers REST APIs

– Diffbot has Knowledge Graph API

Disclosure – This post contains some sponsored links and some affiliate links, and we may earn a commission when you click on the links at no additional cost to you.