Artificial intelligence has come a long way, and GPT models are leading the charge. From generating human-like text to powering customer support, marketing, education, and even creative writing, these models have become the backbone of modern AI applications.

In 2025, training GPT models is no longer limited to research labs or tech giants. With open-source frameworks, scalable cloud solutions, and user-friendly tools, more professionals and organizations can now fine-tune or train their own GPT models for specific use cases.

In this article, we’ll walk you through everything you need to know about training GPT models in 2025. This guide will help you navigate the process confidently.

What GPT Training Actually Means

Training a GPT model can mean a few different things, and understanding the distinction is key before diving in. Broadly, there are three approaches:

1. Pre-Trained Models

These are the GPT models with massive models trained on billions of words from books, websites, and other text sources. They already understand grammar, context, and general knowledge, making them ready for a wide range of applications out of the box.

2. Fine-Tuned Models

Fine-tuning is like giving a pre-trained GPT model a specialty course. You start with a general model and train it further on a specific dataset. For example, customer support chats, medical reports, or product descriptions. The model retains its general language understanding but becomes sharper and more useful in your particular domain.

3. Customized Models

This goes a step beyond fine-tuning. Here, organizations adjust the model’s behavior, tone, or response style to align with a brand, user experience, or regulatory requirement. Customization often involves additional training techniques, prompt engineering, and careful evaluation to make sure the model performs exactly as intended.

At the heart of all of this is transformer architecture, the technology that powers GPT. Transformers allow the model to understand context across long passages of text and make connections between words.

Today, most training doesn’t involve starting from scratch. Instead, the trend is adapting existing intelligence like leveraging pre-trained models and fine-tuning or customizing them for specific needs. This not only saves time and computational resources but also ensures that models perform reliably in real-world applications.

Why You Might Train Your Own GPT

Here’s why many choose to fine-tune or customize one for their own needs:

1. Domain Accuracy

Generic GPT models are smart, but they’re not experts in every field. Fine-tuning ensures your model understands the jargon, facts, and nuances of your specific industry. Businesses in healthcare, law, and customer support now rely on fine-tuned GPT for factual accuracy and compliance confidence.

2. Privacy & Compliance

Some industries deal with sensitive data that cannot leave secure environments. Training your own GPT, or fine-tuning a model on private datasets, ensures that confidential information never leaves your control, keeping you compliant with regulations like HIPAA or GDPR.

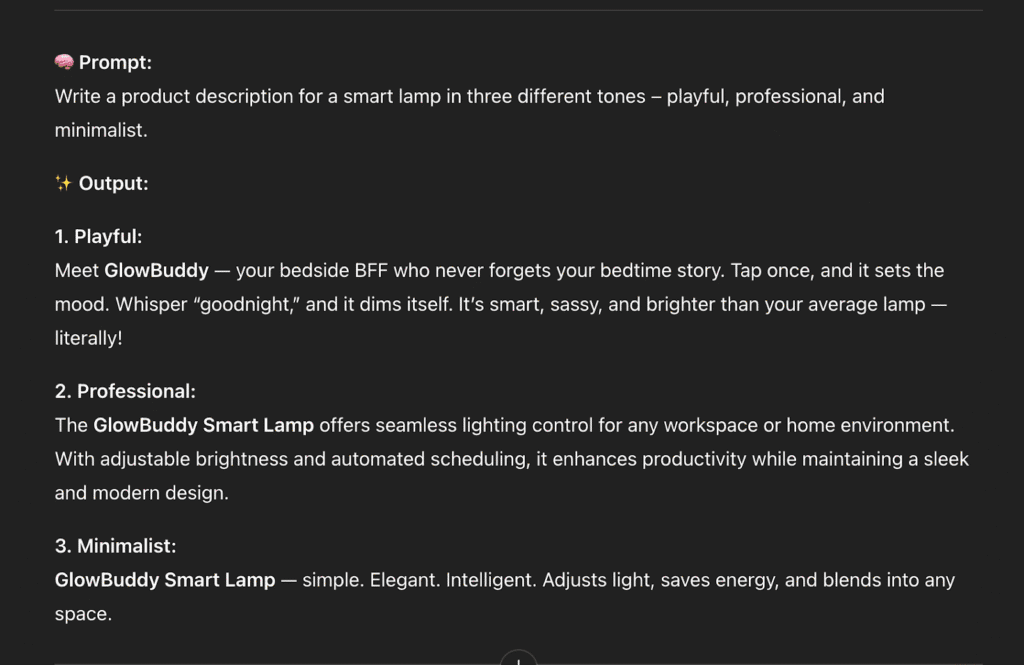

3. Brand Voice Consistency

Your customers recognize your tone, style, and personality. Customizing a GPT model ensures it communicates your brand’s voice, whether it’s professional, friendly, or playful, across emails, chatbots, and content creation.

4. Cost Optimization

Relying entirely on API calls to large pre-trained models can get expensive at scale. Fine-tuning or running your own model in-house can significantly reduce costs, especially when processing large volumes of text.

The 4 Modern Approaches to Training GPT Models

Depending on your goals, data, and resources, you might choose one or more of these approaches:

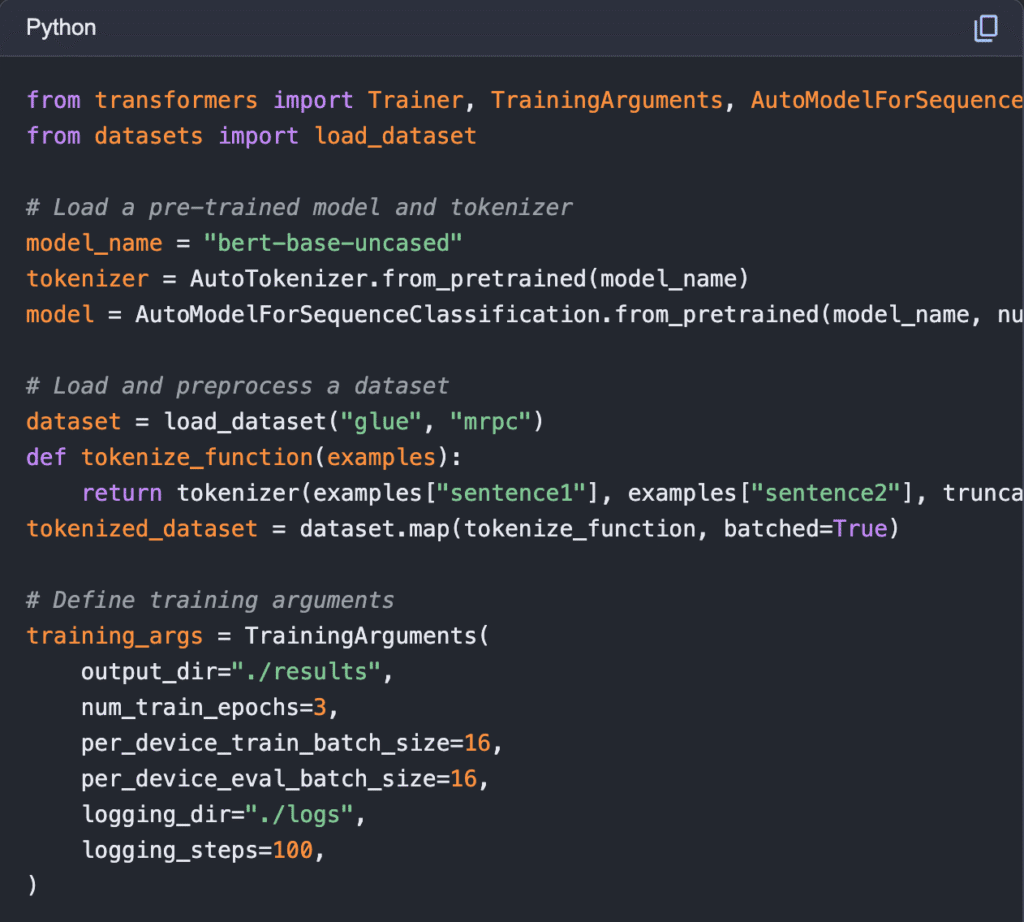

1. Fine-Tuning

Fine-tuning involves taking a pre-trained GPT model and training it further on your own dataset. This approach gives your model deep domain expertise and highly specialized knowledge.

Pros: High accuracy, domain-specific mastery

Ideal For: Industries like healthcare, legal, or technical support where precision is critical.

2. Retrieval-Augmented Generation (RAG)

RAG combines GPT with an external knowledge base, allowing the model to pull in up-to-date information on demand.

Pros: Keeps responses current without restraining the model constantly

Ideal For: Businesses where data changes frequently, like news platforms, product catalogs, or research databases.

3. Custom GPT Platforms (No-Code)

No-code platforms let you fine-tune and deploy GPT models without writing extensive code.

Pros: Rapid deployment, user-friendly interface, minimal technical overhead

Ideal For: Startups, marketing teams, or non-technical teams that need fast results without a dedicated ML team.

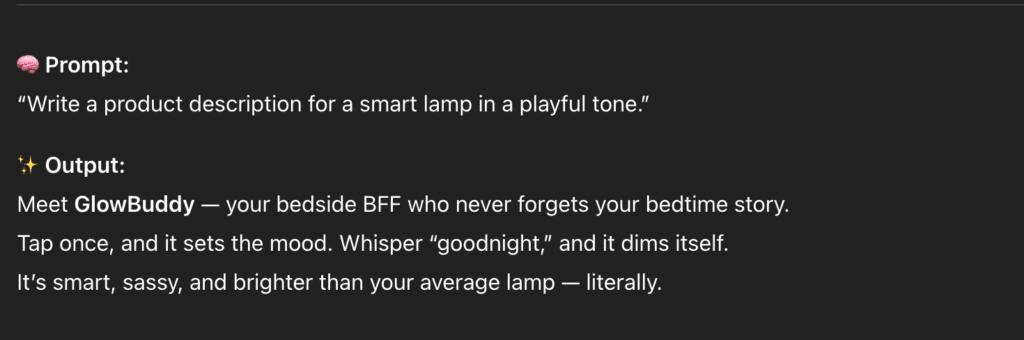

4. Prompt Engineering/Few-Shot Learning

Instead of training the model, you craft clever prompts or provide a few examples to guide GPT’s output.

Pros: Quick implementation, no heavy computing required

Ideal For: Projects with smaller datasets or when you need fast, iterative improvements without full model retraining.

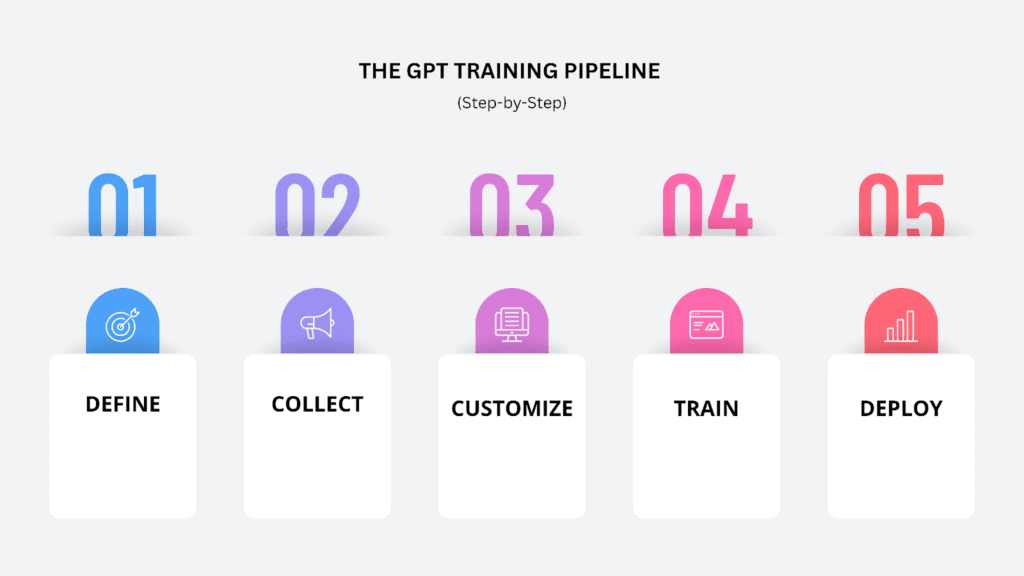

The GPT Training Pipeline (Step-by-Step)

Training a GPT model may sound complex, but breaking it into clear steps makes the process manageable. Here’s a practical pipeline to guide you from concept to deployment:

1. Define Goals

Start by clarifying your use case. Who will use the model? What problem should it solve? What does success look like? Is it faster responses, higher accuracy or a more consistent brand voice? Clear goals guide every step of the training process.

2. Collect and Prepare Data

High-quality data is the backbone of any GPT model. Focus on clean, structured, and relevant datasets. This will directly impact your model’s performance.

Collecting this data can be resource-intensive, especially when sourcing diverse, real-world information. Tools like Decodo’s Web Scraping API simplify the process. Decodo handles proxy rotation, CAPTCHA solving, and deduplication, giving your team a clean, scalable dataset without heavy engineering overhead.

For teams conducting deeper research or industry-specific analysis, Decodo’s range of residential, datacenter, and mobile proxies allows safer, more granular access to web data across geographies, ensuring your datasets are not only scalable but also contextually rich and representative.

3. Choose Your Customization Approach

Match your goal to the right training method. Need deep domain mastery? Go for fine-tuning. Want up-to-date answers without retraining? RAG might be the solution. For rapid deployment with minimal coding, no-code platforms work best. Prompt engineering and few-shot learning are perfect for quick, iterative improvements.

4. Train and Validate

Configure your model, run training sessions, and validate outputs. Testing and iterating are critical here, so monitor for accuracy, bias, and consistency. Small adjustments at this stage save huge headaches after deployment.

5. Deploy and Monitor

Once the model performs reliably, integrate it where it’s needed: APIs, chat widgets, internal tools, or customer support channels. Post-deployment monitoring is key to catching errors, tracking performance, and updating your model as new data comes in.

Common Challenges and How Teams Overcome Them

Training GPT models is powerful, but it comes with real-world challenges. Understanding them and knowing how to tackle them is key to a successful project.

1. Data Privacy & Security

Handling sensitive data requires strict protocols. Teams overcome this by anonymizing datasets, using secure cloud environments, and fine-tuning models in-house rather than exposing data externally. For regulated industries, this isn’t optional; it’s a necessity.

2. Compute and Cost Constraints

GPT models are resource-hungry. High-performance GPUs and large memory requirements can be expensive. Organizations often optimize costs by using cloud-based AI platforms, leveraging pre-trained models, or training smaller, specialized models instead of starting over.

3. Limited Technical Expertise

Not every team has ML engineers on hand. No-code platforms and guided fine-tuning tools make it possible for marketing, product, or operations teams to train models without deep technical expertise. Pairing these tools with clear workflows and documentation ensures smooth execution.

4. Context Window & Relevance

GPT models have limits on how much text they can remember at once. Teams address this by chunking data, leveraging Retrieval-Augmented Generation (RAG), or designing prompts that prioritize the most relevant information.

5. Brand Consistency & Tone

Ensuring the model speaks in your brand voice can be tricky. Custom fine-tuning, careful prompt design, and iterative testing help models maintain a consistent tone across all outputs.

6. Bias & Hallucination Risks

Models can sometimes produce biased or inaccurate outputs. Teams mitigate this by curating high-quality datasets, reviewing model outputs regularly, and implementing post-processing rules to catch errors before they reach customers.

Real-World Implementations

Here are a few ways organizations are putting these models to work in 2025:

1. Healthcare Copilots

Hospitals and clinics are using GPT-powered copilots to summarize patient records, suggest diagnoses, and even draft clinical notes, helping doctors make faster, more informed decisions.

2. E-Commerce Content Generators

Retailers and online marketplaces deploy GPTs to automatically create product descriptions, ad copy, and promotional content. This not only saves time but also ensures consistent brand messaging across thousands of listings.

3. Enterprise Knowledge Assistants

Large organizations are using GPT models as an internal assistant to manage documents, answer employee queries, and streamline workflows, reducing time spent hunting for information.

4. EdTech Learning Companions

Education platforms leverage GPTs to provide personalized study support, explain concepts in simpler terms, and generate practice questions, transforming how students interact with learning material.

Future Trends in GPT Training (2025 and Beyond)

Here’s what we’re seeing on the horizon:

1. Small, Specialized Models

Instead of relying solely on massive, general-purpose GPTs, organizations are developing smaller models focused on niche domains. These models are faster, more efficient, and excel at highly specific tasks.

2. Privacy-First Training

With increasing data privacy concerns, on-premise training and encrypted fine-tuning are becoming standard. Organizations can now customize models without ever exposing sensitive data externally.

3. Multi-Agent GPT Ecosystems

GPTs aren’t just working solo anymore. Multi-agent systems, where models collaborate to solve complex problems, are on the rise. This allows for more sophisticated workflows, from research assistance to business process automation.

4. Synthetic Data for Safe Fine-Tuning

Generating high-quality synthetic data helps teams fine-tune models without risking privacy or compliance issues. Synthetic datasets also allow for training on rare scenarios that would be hard to capture in real-world data.

At the heart of every successful GPT implementation is data. Whether you’re fine-tuning a model, setting up a retrieval pipeline, or experimenting with prompt engineering, the quality and structure of your data define the results. Platforms like Decodo make this part seamless, handling everything from scraping and deduplication to proxy rotation, so your AI starts learning from what sorted, complete datasets.

With the right approach, even small teams can leverage GPT models to improve workflows, enhance decision-making, and deliver experiences that feel intelligent, precise, and personalized. The future of AI isn’t just in building bigger models; it’s in training models that understand and serve your domain with clarity and confidence.

If you’re looking for more insights from the AI world, check out our other blogs:

- Claude vs ChatGPT: Which is Better in 2025? (Marketing Perspective)

- Google AI Mode: How Should Marketers Optimize Better in 2025

- Best AI Visibility Tools for Brand Tracking in ChatGPT and LLMs

FAQs

Success depends on your goals. Common metrics include accuracy, relevance, and response consistency for domain-specific tasks. You can also track ROI by measuring time saved, error reduction, or improved engagement in real-world use cases. Combining automated evaluation with human review often gives the best insights.

Cloud platforms like AWS, Azure, and Google Cloud provide scalable GPU/TPU resources, letting you pay for only what you use. Smaller teams can start with mid-range GPUs or leverage pre-trained models to reduce compute costs. Hybrid approaches, combining local testing with cloud deployment, balance performance and cost.

Treat your model like software. Use model versioning systems or platforms that track training runs, datasets, and fine-tuning checkpoints. Regularly test new versions before deployment to ensure stability and consistent outputs. Document changes clearly for team collaboration and reproducibility.

Yes. Depending on your industry and region, regulations like GDPR, HIPAA, or sector-specific AI guidelines may apply. Keep data anonymized, store it securely, and maintain audit logs. For sensitive sectors, training models on-premise or using encrypted datasets helps stay compliant.

Most GPT models can be accessed via APIs, allowing seamless integration into apps, chatbots, CRMs, or internal tools. Plan endpoints, authentication, and error handling carefully. You can also combine GPT outputs with workflow automation platforms for smooth, scalable adoption.

Absolutely. Using pre-trained models, fine-tuning with smaller datasets, or leveraging no-code/custom GPT platforms allows small teams to achieve high-quality results without massive infrastructure. Optimizing datasets and using cloud resources strategically reduces the compute burden.

Disclosure – This post contains some sponsored links and some affiliate links, and we may earn a commission when you click on the links at no additional cost to you.